On Thursday's Winter Solstice, I created a Horned God effigy for my synthetic Yule tree 🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈🎄😈

— oioiiooixiii (@oioiiooixiii) December 24, 2017

info: https://en.wikipedia.org/wiki/Horned_God


#!/bin/bash
# Temporal slice-stacking effect with FFmpeg (aka 'wibbly-wobbly' video).
# See 'NOTES' at bottom of script.
# Ver. 2017.10.01.22.14.08
# source: http://oioiiooixiii.blogspot.com

function cleanUp() # tidy files after script termination
{
  rm -rf "$folder" \
  && echo "### Removed temporary files and folder '$folder' ###"
}
trap cleanUp EXIT

### Variables
folder="$(mktemp -d)" # create temp work folder
duration="$(ffprobe "$1" 2>&1 | grep Duration | awk '{ print $2 }')"
seconds="$(echo "$duration" \
  | awk -F: '{ print ($1 * 3600) + ($2 * 60) + $3 }' \
  | cut -d '.' -f 1)"
fps="$(ffprobe "$1" 2>&1 \
  | sed -n 's/.*, \(.*\) fps,.*/\1/p' \
  | awk '{printf("%d\n",$1 + 0.5)}')"
frames="$(( seconds*fps ))"
width="640" # CHANGE AS NEEDED (e.g. width/2 etc.)
height="360" # CHANGE AS NEEDED (e.g. height/2 etc.)

### Filterchains
stemStart="select=gte(n\,"
stemEnd="),format=yuv444p,split[horz][vert]"
horz="[horz]crop=in_w:1:0:n,tile=1x${height}[horz]"
vert="[vert]crop=1:in_h:n:0,tile=${width}x1[vert]"
merge="[0:v]null[horz];[1:v]null[vert]"
scale="scale=${width}:${height}"

### Create resized video, or let 'inputVideo=$1'
clear; echo "### RESIZING VIDEO (location: $folder) ###"
inputVideo="$folder/resized.mkv"
ffmpeg -loglevel debug -i "$1" -vf "$scale" -crf 10 "$inputVideo" 2>&1 \
  | grep 'frame=' | tr \\n \\r; echo

### MAIN LOOP
for (( i=0; i<frames; i++ ))
do
  echo -ne "### Processing Frame: $i of $frames ### \033[0K\r"
  ffmpeg \
    -loglevel panic \
    -i "$inputVideo" \
    -filter_complex "${stemStart}${i}${stemEnd};${horz};${vert}" \
    -map '[horz]' \
    -vframes 1 \
    "$folder"/horz_frame${i}.png \
    -map '[vert]' \
    -vframes 1 \
    "$folder"/vert_frame${i}.png
done

### Join images (optional sharpening, upscale, etc. via 'merge' variable)
echo -ne "\n### Creating output videos ###"
ffmpeg \
  -loglevel panic \
  -r "$fps" \
  -i "$folder"/horz_frame%d.png \
  -r "$fps" \
  -i "$folder"/vert_frame%d.png \
  -filter_complex "$merge" \
  -map '[horz]' \
  -r "$fps" \
  -crf 10 \
  "${1}_horizontal-smear.mkv" \
  -map '[vert]' \
  -r "$fps" \
  -crf 10 \
  "${1}_vertical-smear.mkv"

### Finish and tidy files
exit

### NOTES ######################################################################
# The input video is resized to reduce the number of frames needed to fill the
# frame dimensions (which can produce more interesting results).
# This is done by producing a separate video, but the scaling can instead be
# included at the start of the 'stemStart' filterchain to resize on-the-fly.
# Adjust 'width' and 'height' for alternate effects.
# For seamless looping, an alternative file should be created by looping
# the desired section of video, but set the number of processing frames to the
# original video's 'time*fps' number. The extra frames are only needed to fill
# the void [black] area in frames beyond loop points.

download: ffmpeg_wobble-video.sh
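The frame count in the script comes from two small pieces of arithmetic. The first converts the "Duration:" timestamp to whole seconds. The second rounds the frame rate to an integer. The sketch below isolates both as hypothetical helpers (`durationToSeconds`, `roundFps`; the names are invented here) so they can be checked on their own. It also assumes the rate might arrive as a fraction such as `30000/1001`. That is the form ffprobe uses for its `r_frame_rate` field, rather than the decimal "29.97 fps" text the script greps for.

```shell
#!/bin/bash
# Hypothetical helpers isolating the arithmetic used by the wobble script.

# 'HH:MM:SS.ss' (as in ffprobe's "Duration:" line, possibly with a trailing
# comma) to whole seconds; the fractional part is truncated, as in the script.
function durationToSeconds()
{
  awk -F: '{ sub(/,$/, "", $3); printf("%d\n", ($1 * 3600) + ($2 * 60) + $3) }' <<<"$1"
}

# Decimal ('29.97') or rational ('30000/1001') frame rate, rounded to the
# nearest integer.
function roundFps()
{
  awk -F/ '{ rate = ($2 == "" ? $1 : $1 / $2); printf("%d\n", rate + 0.5) }' <<<"$1"
}
```

With these, the script's `frames` value would be `$(( $(durationToSeconds "$duration") * $(roundFps "$rate") ))`. For example, `00:01:23.45,` at `30000/1001` gives 83 × 30 frames.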

2012: eagerly wait for NECA to bring out an Elizabeth Shaw figure

— oioiiooixiii (@oioiiooixiii) September 8, 2017

2017: character unceremoniously killed off - NECA bring out a Shaw figure 😒 pic.twitter.com/Utfzjyn5OM

#!/bin/bash

# Generate ['Scanimate' inspired] rainbow trail video effect with FFmpeg

# (N.B. Resource intensive - consider multiple passes for longer trails)

# version: 2017.08.08.13.47.31

# source: http://oioiiooixiii.blogspot.com

function rainbowFilter() #1:delay 2:keytype 3:color 4:sim val 5:blend 6:loop num

{

local delay="PTS+${1:-0.1}/TB" # Set delay between video instances

local keyType="${2:-colorkey}" # Select between 'colorkey' and 'chromakey'

local key="0x${3:-000000}" # 'key colour

local chromaSim="${4:-0.1}" # 'key similarity level

local chromaBlend="${5:-0.4}" # 'key blending level

local colourReset="colorchannelmixer=2:2:2:2:0:0:0:0:0:0:0:0:0:0:0:0

,smartblur"

# Reset colour after each colour change (stops colours heading to black)

# 'smartblur' to soften edges caused by setting colours to white

# Array of rainbow colours. Ideally, this could be generated algorithmically

local colours=(

"2:0:0:0:0:0:0:0:2:0:0:0:0:0:0:0" "0.5:0:0:0:0:0:0:0:2:0:0:0:0:0:0:0"

"0:0:0:0:0:0:0:0:2:0:0:0:0:0:0:0" "0:0:0:0:2:0:0:0:0:0:0:0:0:0:0:0"

"2:0:0:0:2:0:0:0:0:0:0:0:0:0:0:0" "2:0:0:0:0.5:0:0:0:0:0:0:0:0:0:0:0"

"2:0:0:0:0:0:0:0:0:0:0:0:0:0:0:0"

)

# Generate body of filtergraph (default: 7 loops. Also, colour choice mod 7)

for (( i=0;i<${6:-7};i++ ))

{

local filter=" $filter

[a]$colourReset,

colorchannelmixer=${colours[$((i%7))]},

setpts=$delay,

split[a][c];

[b]${keyType}=${key}:${chromaSim}:${chromaBlend}[b];

[c][b]overlay[b];"

}

printf "split [a][b];${filter}[a][b]overlay"

}

ffmpeg -i "$1" -vf "$(rainbowFilter)" -c:v huffyuv "${1}_rainbow.avi"

download: ffmpeg_rainbow-trail.sh
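A comment in the script notes that the colour array could ideally be generated algorithmically. Here is one small step in that direction. `colorchannelmixer` takes its 16 values in the order rr:rg:rb:ra:gr:gg:gb:ga:br:bg:bb:ba:ar:ag:ab:aa, and the seven hand-written entries differ only in rr, gr and br. So a hypothetical helper (`mixerFor`, with an invented `rainbowTriples` list) can rebuild them from output-gain triples. This is a sketch, not the script's own code.

```shell
#!/bin/bash
# Hypothetical helper: build a 16-value 'colorchannelmixer' argument in which
# only the red input channel feeds the red, green and blue outputs (rr, gr, br),
# matching the pattern of the hand-written 'colours' array.
function mixerFor() # 1: rr gain 2: gr gain 3: br gain
{
  printf '%s:0:0:0:%s:0:0:0:%s:0:0:0:0:0:0:0\n' "${1:-0}" "${2:-0}" "${3:-0}"
}

# Same seven colours, same order as the original array (rr gr br)
rainbowTriples=("2 0 2" "0.5 0 2" "0 0 2" "0 2 0" "2 2 0" "2 0.5 0" "2 0 0")
colours=()
for triple in "${rainbowTriples[@]}"
do
  read -r r g b <<<"$triple"
  colours+=("$(mixerFor "$r" "$g" "$b")")
done
printf '%s\n' "${colours[@]}"
```

A fully algorithmic version would then only need to generate the triples, for example by stepping through hues. That part is left open here.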

#!/bin/bash
# Extract section of video using time-codes taken from MPV screen-shots
# Requires specific MPV screen-shot naming scheme: screenshot-template="%f__%P"
# N.B. Skeleton script demonstrating basic operation

# First and last screen-shots give the start and end points
firstShot="$(ls -1 *.jpg | head -1)"
lastShot="$(ls -1 *.jpg | tail -1)"
startTime="$(cut -d. -f-2 <<< "${firstShot##*__}")"
endTime="$(cut -d. -f-2 <<< "${lastShot##*__}")"
filename="${firstShot%__*}"

ffmpeg \
  -i "$filename" \
  -ss "$startTime" \
  -to "$endTime" \
  "EDIT__${filename}__${startTime}-${endTime}.${filename##*.}"

Another approach to this (and perhaps a more sensible one) is to script it all through MPV itself. However, that ties the technique to MPV, whereas this 'screen-shot' idea works with any media player that puts timestamps in its screen-shot filenames. Also, it's a little more tangible: you can create a series of screen-shots and later decide which ones are timed better.
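To support that "decide later" step, a hypothetical helper (`listShots`, invented here) could list every screen-shot's timestamp and source file in time order. It assumes the same `%f__%P` template and `.jpg` output as the script, e.g. `clip.mkv__00:01:23.456.jpg`.

```shell
#!/bin/bash
# Hypothetical helper: print "timestamp<TAB>source file" for each MPV
# screen-shot in the current folder, sorted by timestamp.
# Assumes screenshot-template="%f__%P" and '.jpg' screen-shots.
function listShots()
{
  local shot stamp
  for shot in *__*.jpg
  do
    [ -e "$shot" ] || continue   # no matches: the glob stays literal, skip it
    stamp="${shot##*__}"         # e.g. '00:01:23.456.jpg'
    stamp="${stamp%.jpg}"        # e.g. '00:01:23.456'
    printf '%s\t%s\n' "$stamp" "${shot%__*}"
  done | sort
}
```

Unwanted candidates can simply be deleted before running the extraction script, which uses only the first and last screen-shots.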

'mpv-webm: Simple WebM maker for mpv, with no external dependencies.' https://github.com/ekisu/mpv-webm Create video clip at selected start/end points, as well as crop coordinates if required. Encoder options, and ability to Preview before output.

— oioiiooixiii (@oioiiooixiii) September 11, 2018

From the archives: Circa 2002, Euro conversion calculator produced for 'Denny' (processed meats). Given away for free* on selected products. pic.twitter.com/7ap4bW15xR

— oioiiooixiii (@oioiiooixiii) May 4, 2017

Wouldn't turn on, no matter how much light given to solar panel ["perhaps the battery is acting as a resistor?" I thought]. Opened it; found a wonderful surprise [solar panel is a fake]. pic.twitter.com/cetu2xPdeF

— oioiiooixiii (@oioiiooixiii) May 4, 2017

# A simple 'youtube-dl' one-liner that can replace everything in the script below:
youtube-dl "$1" -f 'bestvideo+bestaudio' -o "%(channel_id)s---%(id)s---%(title)s.%(ext)s"

#!/bin/bash

################################################################################

# Download YouTube video, adding 'channel ID' to downloaded video filename¹

# - Arguments: YouTube URL

# source: https://oioiiooixiii.blogspot.com

# version: 2017.11.26.00.04.10

# - Fixed filename issue where youtube-dl uses "mkv" container

# ------- 2017.11.11.15.19.06

# - Changed 'best (mp4)' to best anything (for vp9 4K video)

# ------- 2017.08.05.22.50.15

################################################################################

# Checks if video already exists in folder (checks YouTube ID in filename)
compgen -G "*${1##*=}.m*" > /dev/null \
  && echo "*** FILE ALREADY EXISTS - ${1##*=} ***" \
  && exit

# Download html source of YouTube video webpage
html="$(wget -qO- "$1")"

# Extract YouTube channel ID from html source (first match only)
channelID="$(grep channelId <<<"$html" \
  | tr \" \\n \
  | grep -oE 'UC[-_A-Za-z0-9]{21}[AQgw]' \
  | head -n 1)"

# Download best version of YouTube video
youtube-dl -f 'bestvideo+bestaudio' \
  --add-metadata \
  "$1"

# Download best (MP4) version of YouTube video
#youtube-dl -f 'bestvideo[ext=mp4]+bestaudio[ext=m4a]/best[ext=mp4]/best' \
#  --add-metadata \
#  "$1"

# Get filename of video created by youtube-dl
filename="$(find . -maxdepth 1 -name "*${1##*=}.m*" \
  | cut -d/ -f2)"

# Rename file, prefixing it with the channel ID
echo "Renaming file to: ${channelID}_${filename}"
mv "$filename" "${channelID}_${filename}"

### NOTES ######################################################################

# ¹2017, May 21: Waiting for this to be implemented in youtube-dl

# youtube-dl -v -f137+140 -o '%(channel_id)s-%(title)s-%(id)s.%(ext)s'

# https://github.com/rg3/youtube-dl/issues/9676

download: ytdl.sh
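The script takes the video ID as `${1##*=}`, i.e. everything after the last `=` in the URL. That works for `...watch?v=ID` but not for URLs with extra parameters (`&t=30`) or for `youtu.be/ID` links. The following is a minimal sketch of a more tolerant extraction. It assumes IDs are 11 characters from `[-_A-Za-z0-9]`, and the function name `youtubeID` is invented here.

```shell
#!/bin/bash
# Hypothetical helper: print the 11-character YouTube video ID found after
# '?v=' / '&v=' or 'youtu.be/' in a URL; return 1 if none is found.
function youtubeID()
{
  local re='([?&]v=|youtu\.be/)([-_A-Za-z0-9]{11})'
  if [[ "$1" =~ $re ]]
  then
    printf '%s\n' "${BASH_REMATCH[2]}"
  else
    return 1
  fi
}
```

In the script, `id="$(youtubeID "$1")" || exit 1` could then stand in for each `${1##*=}`.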

I had this mad idea of limiting myself to 'x11-apps' https://t.co/AgdyjrGqfC pic.twitter.com/nsuSY3UVaG

— oioiiooixiii (@oioiiooixiii) February 28, 2017

YouTube 2017 https://t.co/jlctMmDH0Q #MommyandGracieShow pic.twitter.com/Jh9FJKmrK7

— oioiiooixiii {gifs} (@oioiiooixiii_) April 29, 2017

# Some basic Bash/FFmpeg notes on the procedures involved:

# Select random 144 videos from current folder ('sort -R' or 'shuf')

find ./ -name "*.mp4" | sort -R | head -n 144

# Generate 144 '-i' input text for FFmpeg (files being Bash function parameters)

echo '-i "${'{1..144}'}"'

# Or use 'eval' for run-time creation of FFmpeg command

eval "ffmpeg $(echo '-i "${'{1..144}'}"')"

# VIDEO - 10 separate FFmpeg instances

# Create 9 rows of 16 videos with 'hstack', then use these as input for 'vstack'

[0:v][1:v]...[15:v]hstack=16[row1];

[row1][row2]...[row9]vstack=9

# [n:v] Input sources can be omitted from stack filters if all '-i' files used

# AUDIO - 1 FFmpeg instance

# Mix 144 audio tracks into one output (truncate with ':duration=first' option)

amix=inputs=144

# If needed, normalise audio volume in two passes - first analyse audio

-af "volumedetect"

# Then increase volume based on 'max' value, such that 0dB not exceeded

-af "volume=27dB"

# Mux video and audio into one file

ffmpeg -i video.file -i audio.file -map 0:0 -map 1:0 out.file

# Addendum: Some other thoughts on reflection: perhaps piping the files to an FFmpeg instance with a 'grid' filter might simplify things, or loading the files one by one inside the filtergraph via 'movie=' might be worth investigating.
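Two steps in the notes above are easy to get wrong by hand: writing out 144 `[n:v]` labels for the stack filters, and turning the `volumedetect` result into a `volume=` value. The sketch below shows two hypothetical helpers, not the procedure originally used. `gridFilter` builds a single filtergraph, i.e. one FFmpeg instance instead of the ten described. `gainFromVolumedetect` assumes volumedetect's log contains a line like `max_volume: -27.0 dB`.

```shell
#!/bin/bash
# Hypothetical helpers for the 144-video notes (names invented here).

# Build one filtergraph: 'hstack' each row of inputs, then 'vstack' the rows.
# e.g. gridFilter 9 16 for the 9 x 16 = 144 grid.
function gridFilter() # 1: rows 2: columns
{
  local rows="$1" cols="$2" graph="" stack="" r c
  for (( r=0; r<rows; r++ ))
  do
    for (( c=0; c<cols; c++ ))
    do
      graph+="[$(( r*cols + c )):v]"
    done
    graph+="hstack=inputs=${cols}[row$(( r+1 ))];"
    stack+="[row$(( r+1 ))]"
  done
  printf '%s\n' "${graph}${stack}vstack=inputs=${rows}"
}

# Read volumedetect log text on stdin; print the gain that lifts 'max_volume'
# to 0dB, for use as -af "volume=...". Returns 1 if no max_volume line exists.
function gainFromVolumedetect()
{
  awk '/max_volume:/ { for (i=1; i<=NF; i++) if ($i == "max_volume:") v=$(i+1) }
       END { if (v == "") exit 1; printf("%.1fdB\n", -v) }'
}
```

Example use of the second helper: `ffmpeg -i audio.file -af volumedetect -f null - 2>&1 | gainFromVolumedetect`. The log goes to stderr, hence the `2>&1`.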

¹ See related: https://oioiiooixiii.blogspot.com/2017/01/ffmpeg-generate-image-of-tiled-results.html

#!/bin/bash
# Create Predator [1987 movie] "Adaptive Camo" chromakey effect in FFmpeg
# - Takes arguments: filename, colour hex value (defaults to green).
# ver. 2017.06.25.16.29.43
# source: http://oioiiooixiii.blogspot.com

function setDimensionValues() # Sets global size variables based on file source
{
  dimensions="$(\
    ffprobe \
      -v error \
      -select_streams v:0 \
      -show_entries stream=width,height \
      -of default=noprint_wrappers=1 \
      "$1"\
  )"
  # Create "$height" and "$width" var vals
  eval "$(head -1 <<<"$dimensions");$(tail -1 <<<"$dimensions")"
}

function buildFilter() # Builds filter using core filterchain inside for-loop
{
  # Set video dimensions and key colour
  setDimensionValues "$1"
  colour="0x${2:-00FF00}"
  oWidth="$width"
  oHeight="$height"
  # Arbitrary scaling values - adjust to preference
  for ((i=0;i<4;i++))
  {
    width="$((width-100))"
    height="$((height-50))"
    printf "split[a][b];
[a]chromakey=$colour:0.3:0.06[keyed];
[b]scale=$width:$height:force_original_aspect_ratio=decrease,
pad=$oWidth:$oHeight:$((width/4)):$((height/4))[b];
[b][keyed]overlay,"
  }
  printf "null" # Deals with hanging , character in filtergraph
}

# Generate output
ffplay -i "$1" -vf "$(buildFilter "$@")"
#ffmpeg -i "$1" -vf "$(buildFilter "$@")" -an "${1}_predator-fx.mkv"

video source: https://www.youtube.com/watch?v=7UdhuPnWpHA
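`setDimensionValues` runs ffprobe's `width=…`/`height=…` output through `eval`. That works, but it executes whatever text comes back. The following is a minimal sketch of a hypothetical alternative (`readDimensions`, name invented here). It reads the same `key=value` lines and accepts only the two expected keys with integer values.

```shell
#!/bin/bash
# Hypothetical replacement for the 'eval' step: read "key=value" lines from
# stdin and set the global 'width' and 'height' without executing anything.
function readDimensions()
{
  local key value
  width="" height=""
  while IFS='=' read -r key value
  do
    [[ "$value" =~ ^[0-9]+$ ]] || continue   # ignore non-numeric values
    case "$key" in
      width)  width="$value" ;;
      height) height="$value" ;;
    esac
  done
  [[ -n "$width" && -n "$height" ]]          # fail if either is missing
}
```

It has to run in the current shell rather than at the end of a pipeline, e.g. `readDimensions < <(ffprobe -v error -select_streams v:0 -show_entries stream=width,height -of default=noprint_wrappers=1 "$1")`. Otherwise the variables would be set in a subshell and lost.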

I wrote a Bash function for highly accurate Irish weather forecasting... pic.twitter.com/BhP6jztDuq

— oioiiooixiii (@oioiiooixiii) June 10, 2017

function weather2morrow()
{
  echo "Weather forecast for" \
    "$(date +%A,\ %d\ %B\ %Y --date=tomorrow):" \
    "Sunny spells & scattered showers 🌦 "
}

Aug. 5, 1996: TIME Magazine, "Letters". Amer Matar writes in regards to TIME's July 15th issue, covering US influence on Russian elections. pic.twitter.com/6r244FzqZ5

— oioiiooixiii (@oioiiooixiii) May 22, 2017

Music: Magma - 'Slag Tanz'. Dance: MacMillan's "Rite of Spring" - English National Ballet (2012). Principal: Erina Takahashi. pic.twitter.com/p3K2cxETNd

— oioiiooixiii {gifs} (@oioiiooixiii_) March 20, 2017

Listening to Magma often gives me ideas for dance. I dislike sticking things together (spoils both) but gives some idea of what's in my head https://t.co/CvhBEFj0n6

— oioiiooixiii (@oioiiooixiii) March 20, 2017

source video: https://www.youtube.com/watch?v=GEOi4ZzUud4

Costume design by Kinder Aggugini, with additional development by Katya Ryazanskaya https://t.co/a9bV5iD4RL @oioiiooixiii_ pic.twitter.com/ak0cYeA8ul

— oioiiooixiii (@oioiiooixiii) March 20, 2017

# Use ImageMagick to convert image to text file
convert merkel.jpg merkel.txt

# sed replaces every occurrence of $i value with '0' (except in first line)
for (( i=0; i<255; i++ ))
{
  sed '1! s,'"$i"',0,g' < merkel.txt \
    | convert - "merkel_$i.png"
}

Animated effects can also be interesting...

for (( i=0; i<186; i++)); { sed '1! s,'"$i"',0,g' <merkel.txt | convert - "merkel_$(printf "%03d\n" $i).png"; } # @oioiiooixiii

pic.twitter.com/TcfBFity6Y

— oioiiooixiii {gifs} (@oioiiooixiii_) December 7, 2016

For no other reason than "just because". The Bash script is generic enough to be used in other scenarios. Most of the work is done by MPV and its '--ytdl-format' option. A small delay is added before each mpv call to avoid swamping YouTube with concurrent video requests.

Watching 5 QVC YouTube livestreams at once because: we are living in the future! Might not be the future we wanted but it's the future we got

— @oioiiooixiii February 11, 2017

#!/bin/bash

# Stream 5 QVC YouTube live-streams simultaneously.

# - Requires 'mpv' - N.B. Kills all running instances of mpv when exiting.

# - See YouTube format codes, for video quality, below.

# ver. 2017.02.11.21.46.52

### YOUTUBE VIDEO IDS ##########################################################

# QVC ...... USA .......... UK ........ Italy ....... Japan ...... France

videos=('2oG7ZbZnTcA' '8pHCfXXZlts' '-9RIKfrDP2E' 'wMo3F5IouNs' 'uUwo_p57g5c')

### FUNCTIONS ##################################################################

function finish() # Kill all mpv players when exiting

{

killall mpv

}

trap finish EXIT

function playVideo() # Takes YouTube video ID

{

sleep "$2" # The "be nice" delay

mpv --quiet --ytdl-format 91 "https://www.youtube.com/watch?v=$1"

}

### BEGIN ######################################################################

for ytid in "${videos[@]}"; do ((x+=2)); (playVideo "$ytid" "$x" &); done

read -p "Press Enter key to exit"$'\n' # Hold before exiting

#zenity --warning --text="End it all?" --icon-name="" # Zenity hold alternative

exit

### FORMAT CODES ###############################################################

# format code  extension  resolution  note
# 91           mp4        144p        HLS , h264, aac @ 48k
# 92           mp4        240p        HLS , h264, aac @ 48k
# 93           mp4        360p        HLS , h264, aac @128k
# 94           mp4        480p        HLS , h264, aac @128k
# 95           mp4        720p        HLS , h264, aac @256k
# 96           mp4        1080p       HLS , h264, aac @256k
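The script warns that it kills all running mpv instances on exit, because `finish` calls `killall mpv`. The following is a sketch of a hypothetical alternative that remembers only the players this script starts (`startDelayed`, `stopStarted` and `pids` are invented names). One design point matters. The original launches each player inside `( ... &)`, a subshell, so the parent never sees `$!`. Here the background job is started directly so its PID can be recorded.

```shell
#!/bin/bash
# Hypothetical alternative to 'killall mpv': start each command after a delay,
# record its PID, and on exit kill only those PIDs.
pids=()

function startDelayed() # 1: delay in seconds, remaining args: command to run
{
  local delay="$1"; shift
  # The background group runs in a subshell; 'exec' replaces that subshell
  # with the command after the delay, so the recorded PID becomes the player's.
  # (If killed during the delay, the orphaned 'sleep' simply runs out.)
  { sleep "$delay"; exec "$@"; } &
  pids+=("$!")
}

function stopStarted() # Kill and reap only the processes started above
{
  (( ${#pids[@]} )) || return 0
  kill "${pids[@]}" 2>/dev/null
  wait "${pids[@]}" 2>/dev/null
  pids=()
}
```

In the QVC script this would mean `trap stopStarted EXIT` in place of `trap finish EXIT`. The loop body would then be `((x+=2)); startDelayed "$x" mpv --quiet --ytdl-format 91 "https://www.youtube.com/watch?v=$ytid"`.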

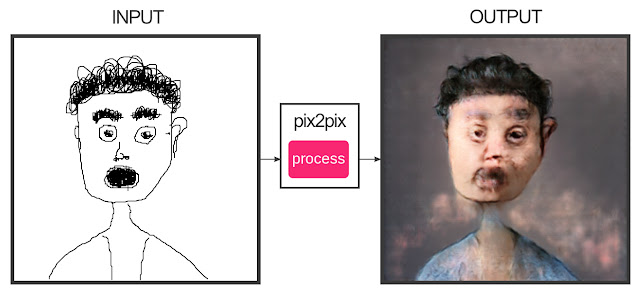

Look, I sketched Trump and Obama shaking hands at the inauguration ceremony. pic.twitter.com/4oQt5Fd9iy

— oioiiooixiii (@oioiiooixiii) January 20, 2017

And now I sketched the First Lady Woman of Amerika. I was a few minutes in before I realised I was taking it too seriously pic.twitter.com/coFmskpmHJ

— oioiiooixiii (@oioiiooixiii) January 21, 2017

# ImageMagick - Use with extracted frames or FFmpeg image pipe (limited to 4GB)
convert -limit memory 4GB frames/*.png -evaluate-sequence max merged-frames.png

# FFmpeg - Chain of tblend filters (N.B. inefficient - better ways to do this)
ffmpeg -i video.mp4 -vf tblend=all_mode=lighten,tblend=all_mode=lighten,...

As a comparison, here is an image made from the same frames but using 'mean' average with ImageMagick.
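Typing the repeated `tblend` chain by hand gets tedious, so here is a minimal sketch of a hypothetical generator (`tblendChain`, name invented here). It assumes each `tblend` lightens a frame against its predecessor. Under that assumption a chain of N filters takes the per-pixel maximum over roughly N+1 consecutive frames, which is why the approach is inefficient for long clips.

```shell
#!/bin/bash
# Hypothetical helper: print N copies of 'tblend=all_mode=lighten' joined by
# commas, ready for use as an FFmpeg -vf filterchain.
function tblendChain() # 1: number of tblend filters (default 1)
{
  local n="${1:-1}" chain="" i
  for (( i=0; i<n; i++ ))
  do
    chain+="${chain:+,}tblend=all_mode=lighten"   # comma only between items
  done
  printf '%s\n' "$chain"
}
```

Example: `ffmpeg -i video.mp4 -vf "$(tblendChain 10)" out.mkv`.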